Stella Bounareli

I am currently a researcher at Meta AI, working in the Behavioural Computting

team on generative modeling and facial animation.

In February 2024 I received my PhD degree from the School of Computer Science and Mathematics of Kingston University of London (UK),

under the supervision of Prof. Vasileios Argyriou and Prof. Georgios Tzimiropoulos. My research focused on facial analysis and manipulation using generative models, i.e.,

Generative Adversarial Networks (GANs) and Diffusion Probabilistic Models (DPMs).

Before my PhD degree, I was working as a Research Assistant at the Center for Research and Technology Hellas CERTH (Greece).

My research was mainly focused on 3D object inspection algorithms, and on object and face detection/tracking on videos.

I obtained my Integrated Masters degree from the ECE Department of Aristotle University of Thessaloniki (Greece), where I did my dissertation,

entitled "Recognition of salient locations and creation of a location timeline by using GPS data", under the direction of Prof. Anastasios Delopoulos.

Publications

-

DiffusionAct: Controllable Diffusion Autoencoder for One-shot Face Reenactment

DiffusionAct: Controllable Diffusion Autoencoder for One-shot Face Reenactment

Paper Project Page Source Code

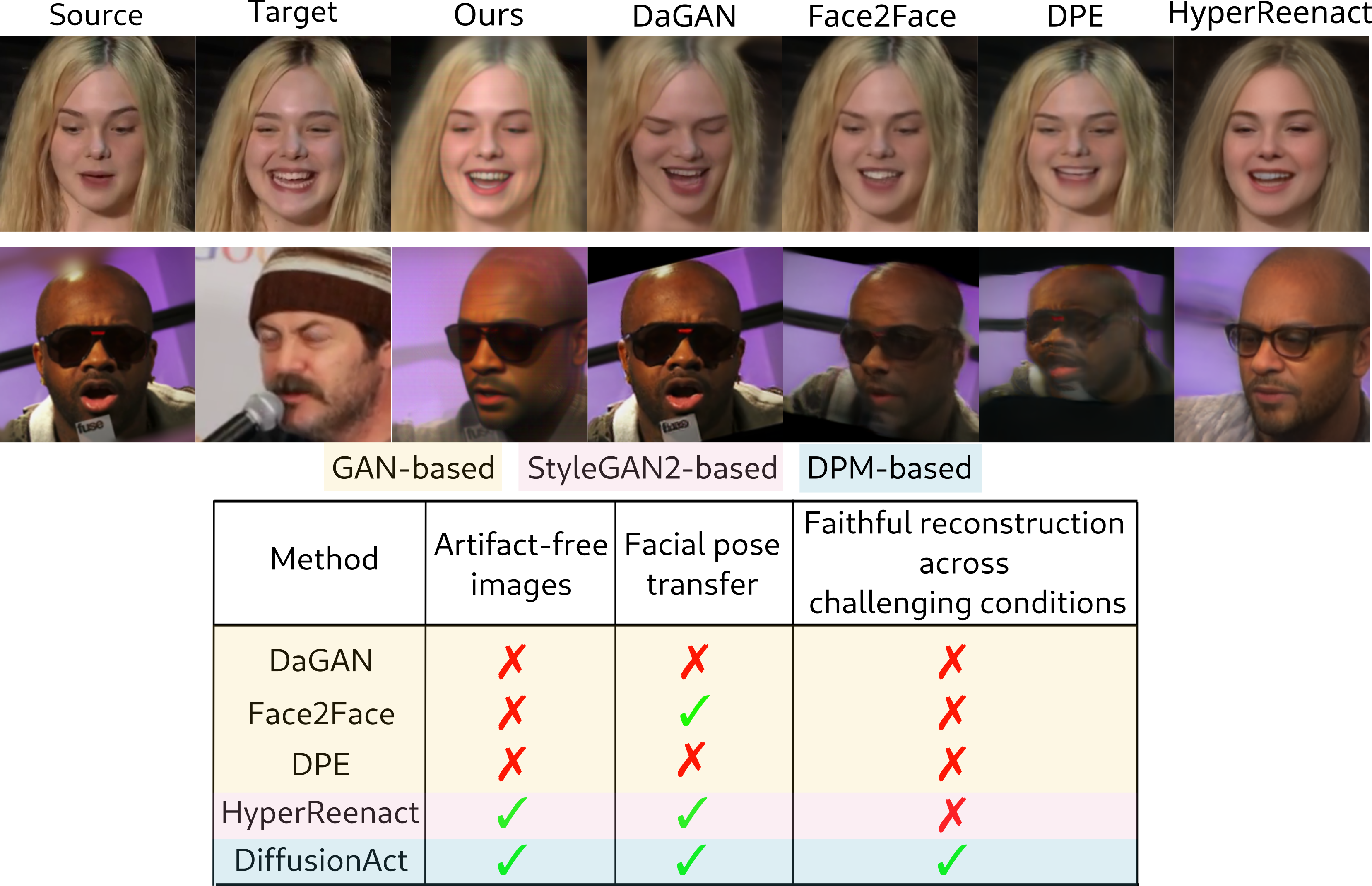

In this paper, we present our method, called DiffusionAct, that aims to generate realistic talking head images of a source identity, driven by a target facial pose using a pre-trained Diffusion Probabilistic Model (DPM). Existing GAN-based methods suffer from either distortions and visual artifacts or poor reconstruction quality, i.e., the background and several important appearance details, such as hair style/color, glasses and accessories, are not faithfully reconstructed. Recent advances in DPMs enable the generation of high-quality realistic images. To this end, we present a novel method that leverages the photo-realistic image generation of diffusion models to perform neural face reenactment. Specifically, we propose to control the semantic space of a Diffusion Autoencoder (DiffAE), in order to edit the facial pose of the input images, defined as the head pose orientation and the facial expressions. Our method allows one-shot, self, and cross-subject reenactment, without requiring subject-specific fine-tuning.

-

One-Shot Neural Face Reenactment via Finding Directions in GAN’s Latent Space International Journal of Computer Vision (IJCV)

Paper

In this paper, we present our framework for neural face/head reenactment whose goal is to transfer the 3D head orientation and expression of a target face to a source face. Previous methods focus on learning embedding networks for identity and head pose/expression disentanglement which proves to be a rather hard task, degrading the quality of the generated images. We take a different approach, bypassing the training of such networks, by using (fine-tuned) pre-trained GANs which have been shown capable of producing high-quality facial images. We show that by embedding real images in the GAN latent space, our method can be successfully used for the reenactment of real-world faces. Our method features several favorable properties including using a single source image (one-shot) and enabling cross-person reenactment.

-

HyperReenact: One-Shot Reenactment via Jointly Learning to Refine and Retarget Faces IEEE/CVF International Conference on Computer Vision (ICCV), 2023

Paper Project Page Source Code

In this paper, we present our method, called HyperReenact, that aims to generate realistic talking head images of a source identity, driven by a target facial pose. Existing state-of-the-art face reenactment methods train controllable generative models that learn to synthesize realistic facial images, yet producing reenacted faces that are prone to significant visual artifacts, especially under the challenging condition of extreme head pose changes, or requiring expensive few-shot fine-tuning. We propose to address these limitations by learning a hypernetwork to perform: (i) refinement of the source identity characteristics and (ii) facial pose re-targeting. Our method operates under the one-shot setting (i.e., using a single source frame) and allows for cross-subject reenactment, without requiring any subject-specific fine-tuning.

-

StyleMask: Disentangling the Style Space of StyleGAN2 for Neural Face Reenactment 17th IEEE conference on Automatic Face and Gesture Recognition (FG), 2023

StyleMask: Disentangling the Style Space of StyleGAN2 for Neural Face Reenactment 17th IEEE conference on Automatic Face and Gesture Recognition (FG), 2023

Paper Source Code

In this paper we present a method that using unpaired randomly generated facial images, learns to disentangle the identity characteristics of a human face from its pose by incorporating the recently introduced style space S of StyleGAN2, a latent representation space that exhibits remarkable disentanglement properties. By capitalizing on this, we learn to successfully mix a pair of source and target style codes using supervision from a 3D model. The resulting latent code, that is subsequently used for reenactment, consists of latent units corresponding to the facial pose of the target only and of units corresponding to the identity of the source only, leading to notable improvement in the reenactment performance compared to recent state-of-the-art methods.

-

Finding Directions in GAN's Latent Space for Neural Face ReenactmentAccepted as oral presentation in:

33rd British Machine Vision Conference (BMVC), 2022

Paper Project Page Source Code

This paper is on face/head reenactment where the goal is to transfer the facial pose (3D head orientation and expression) of a target face to a source face. We introduce a new method for neural face reenactment, by leveraging the high quality image generation of pretrained generative models (GANs) and the disentangled properties of 3D Morphable Models (3DMM). The core of our approach is a method to discover which directions in latent GAN space are responsible for controlling facial pose and expression variations.

2024

2023

2022

Work Experience

Jan. 2024 - now |

Researcher at Meta AI |

2022 - 2023 |

Teaching assistant on MSc programme modules at Kingston Univeristy, London (CI7520: Machine Learning and Artificial Intelligence) |

Jan. 2018 - June 2020 |

Research Assistant at Center for Research and Technology Hellas, CERTH (Greece). Participated in: |